Turning Robot Video into Training Data

Robotics has a data problem. Annotation is the bottleneck, and it's still 100% manual. We're building infrastructure to turn raw, messy video into structured training data using visual AI.

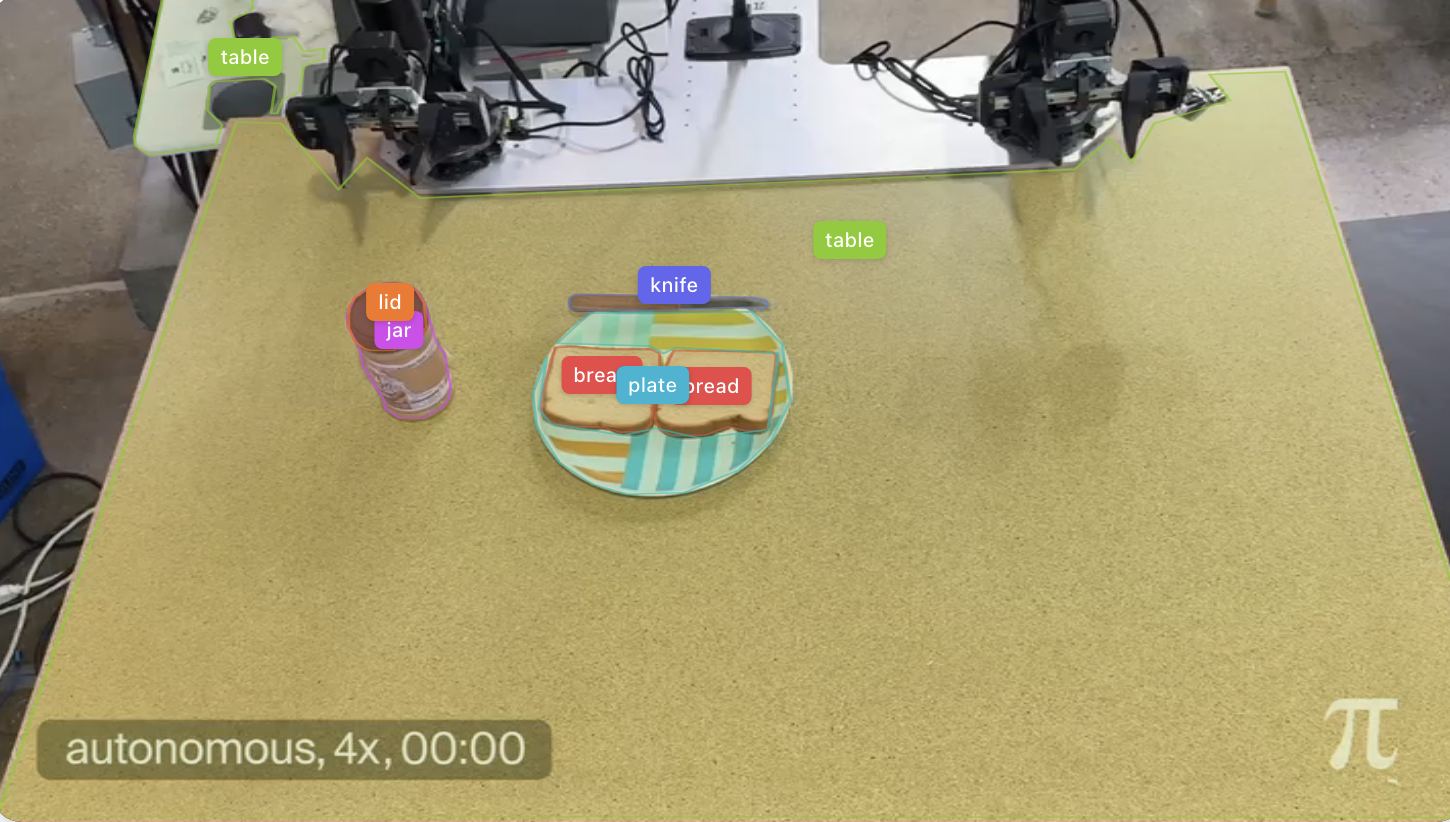

Figure 1: Our segmentation and object localization pipeline, which we use to run subtask segmentation, narration, and evaluation. Video source: Physical Intelligence (π).

TLDR: Robotics has a data problem. Annotation is the bottleneck, and it's still 100% manual. We're building infrastructure to turn raw, messy video into structured training data using visual AI. Current models aren't ready for this in production, but we're making fast progress on systems that are.

The Robotics Data Problem

Robotics is having a moment. Foundation models are getting impressive, hardware is improving, and investment is pouring in. But there's a bottleneck that doesn't get enough attention: data.

The best robotics companies don't just have the best models. They have the best pipelines for collecting and annotating data. That infrastructure creates real competitive advantages. And right now, the annotation piece is almost entirely human. It doesn't scale.

Many teams are tackling data collection through teleoperation and simulation. But once you have hours of robot video, someone still has to watch it, label it, score it, and turn it into something a model can learn from. That's slow, expensive, and limiting.

What We're Building

noetic is building visual understanding infrastructure for robotics. We're using AI to narrate and score robot video, turning terabytes of raw footage into meaningful, structured datasets through an API.

The goal isn't to replace human judgment entirely. It's to make annotation scalable so teams can actually use all the data they're collecting.

Why Off-the-Shelf Models Don't Work

Our first step was testing existing vision-language models on robotics data. The results weren't surprising: they're not ready for production annotation.

Context Limitations

Videos get cut off. Current models have hard limits on how much visual information they can process at once. For robotics, where tasks can span minutes and require tracking subtle changes over time, this is a real problem. Models also degrade in quality as they approach their context limits, even before hitting them.

Noise Sensitivity

Real robotics data is messy. Low resolution, motion blur, unusual camera angles, cluttered environments. Hosted models work reasonably well on clean, simple demos. But that's not what most teams are actually collecting. When you point these models at real-world data, hallucinations spike.

Task Complexity

For simple tasks lasting a few seconds, quality is decent. But the real value, and the real challenge, is in complex, long-horizon tasks. These are exactly the behaviors robots struggle to learn and desperately need annotated data for.

Our Progress

We've been testing on challenging robotics data, including episodes from Physical Intelligence's work on Moravec's Paradox. These are tasks that are intentionally difficult and imperfect. This lets us demonstrate not just narration, but evaluation: scoring how well a task was executed, identifying failure modes, and flagging moments that need attention.

Our demo is currently not publicly available. If interested, shoot us an email at founders@getnoetic.ai.

Narrations

6 segments

The left arm moves towards the jar, grasps its body, and lifts it slightly. Simultaneously, the right arm moves towards the jar, grasps the lid, and rotates it counter-clockwise to unscrew it. The right arm then lifts the lid off the jar, and the left arm places the jar back on the table. Finally, the right arm places the lid on the table to the left of the jar.

The left arm moves to the plate, grasps the knife, and lifts it. The right arm grasps the jar and lifts it off the table, tilting it towards the left arm. The left arm inserts the knife into the tilted jar, scoops out peanut butter, and then withdraws the knife from the jar.

While the right arm releases the jar and moves to hold the plate steady, the left arm moves the knife with peanut butter over a slice of bread. The left arm then spreads the peanut butter across the surface of the bread in several strokes.

The left arm places the knife on the plate, then grasps the second slice of bread and places it on top of the first slice to form a sandwich. The right arm releases the plate and grasps the knife from the plate.

The left arm, holding the knife, moves to position the knife over the center of the sandwich. The right arm moves in and presses down on the sandwich to hold it steady. The left arm then pushes the knife down through the sandwich, cutting it in half. After the cut, both arms lift away, and the left arm places the knife on the plate.

The right arm moves to the jar and grasps it to hold it steady. The left arm then moves to the lid, grasps it, lifts it, and places it on top of the jar. The left arm then rotates the lid clockwise, screwing it onto the jar. After the lid is tightened, the left arm releases it. The right arm then lifts the closed jar and places it on the table before both arms retract.

Figure 2: A preview of our demo's narration feature. Object segmentation, video segmentation, and action grading are also available.

How We Work with Customers

We know robotics teams are moving fast. We match that pace.

We work like forward-deployed engineers, collaborating directly with customers to integrate noetic into their pipelines. We're platform-agnostic and meet teams where they are. When something breaks or needs to change, we're on call to fix it.

This isn't a product you set up once and forget. We iterate alongside you.

What's Next

Multimodal reasoning is still early. Models can process space, time, and meaning, but combining and reasoning over these often fails. Reliable systems need explicit ways to catch and fix these failures. That's what we're focused on.

We're excited about where this is going. If you are too, let's talk.

Contact: founders@getnoetic.ai